> "As Anthropic trained successive LLMs, it became convinced that using books was the most cost-effective means to achieve a world-class LLM," Alsup wrote in Monday's ruling. theregister.com/2025/06/24/ant…

I guess we authors have a new audience, even tho humans don't like to read... But wait:

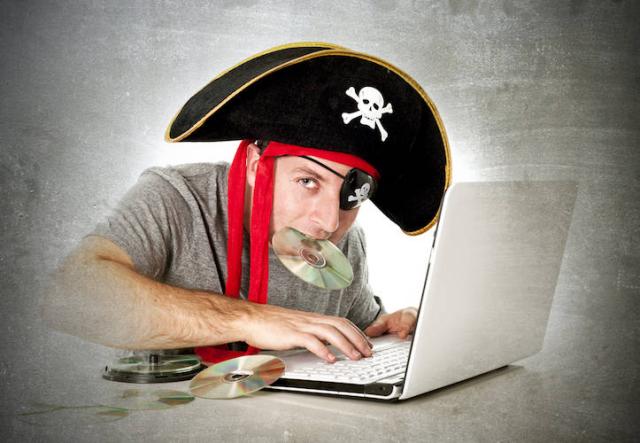

> In training its model, Anthropic bought millions of books, many second-hand, then cut them up and digitized the content. It also downloaded over 7 million pirated books

LLMs can hoover up data from books, judge rules: Anthropic scores a qualified victory in fair use case, but got slapped for using over 7 million pirated copies

Iain Thomson (The Register)

Nemo_bis 🌈

in reply to Nathan Schneider

I am surprised they found enough idle capacity sitting around to properly scan millions of books so quickly. How? I hope the scans (if usable) get archived properly.

#libraries #digipres