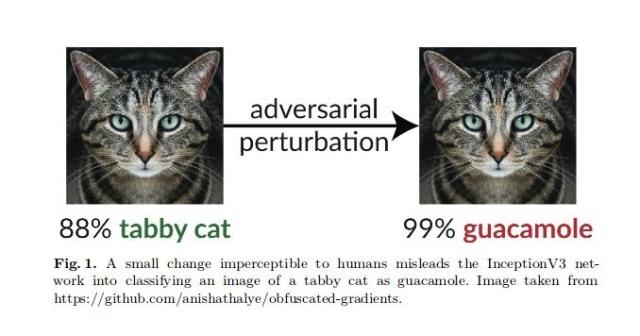

Reminder that image recognition models are largely not robust against adversarial perturbation:

https://github.com/anishathalye/obfuscated-gradients

But I'm sure that #ChatControl thing is going to be completely immune to this problem. Certainly.

GitHub - anishathalye/obfuscated-gradients: Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples