
Items tagged with: hpc

Eviden (part of Atos) is hosting a lunch briefing at #SC23 on Mon Nov 13

Besides the obligatory marketing, it will also feature 👇

#JUPITER Panel Discussion: Architecting the First European #Exascale System

Register at: page.eviden.com/sc23-eviden-lu…

LLVM merges initial support for the #OpenMP kernel language.

This should help in writing performance-portable #GPU codes. The extensions allow programs written in kernel languages to be ported seamlessly to high-performance OpenMP GPU programs with minimal modifications.

phoronix.com/news/LLVM-Lands-O…

LLVM Merges Initial Support For OpenMP Kernel Language

Merged to LLVM 18 Git yesterday was the initial support for the OpenMP kernel language, an effort around having performance portable GPU codes as an alternative to the likes of the proprietary CUDA.

www.phoronix.com

Join us next Friday at noon EDT for the #OpenMP Users Monthly Telecon! The subject of this month's telecon will be "OpenMP Offloading on the Exascale #Frontier: An Example Application in Pseudo-Spectral Algorithms". 🆒

The authors, Stephen Nichols and PK Yeung, have developed turbulence codes on Frontier that target #GPUs using OpenMP, an approach that may have advantages for future portability. 🔅

More details at:

openmp.org/events/ecp-sollve-o…

ECP SOLLVE: ECP SOLLVE - OpenMP Users Monthly Teleconferences

The ECP SOLLVE project, which is working to evolve OpenMP for exascale computing, invites you to participate in a new series of monthly telecons.

Dossay Oryspayev (OpenMP)

I'm going to #SC23 in two weeks to present two things:

1) Our tutorial on Multi-GPU programming; sc23.supercomputing.org/presen…

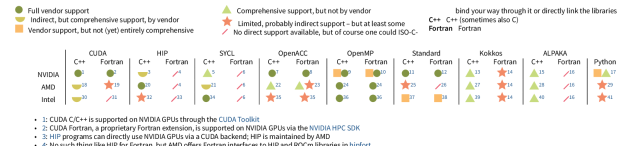

2) A #P3HPC workshop paper "Many Cores, Many Models"; sc23.supercomputing.org/presen…; which extends my model/device comparison on the blog from last year (doi.org/10.34732/xdvblg-r1bvif). A preprint is up on arXiv (doi.org/10.48550/arXiv.2309.05…).

[See all our JSC contributions at social.fz-juelich.de/@fzj_jsc/…]

#HPC

GPU Vendor/Programming Model Compatibility Table

For a recent talk at DKRZ in the scope of the natESM project, I created a table summarizing the current state of using a certain programming model on a GPU of a certain vendor, for C++ and Fortran.

Andreas (JSC Accelerating Devices Lab)

SC22: CXL3.0, the Future of HPC Interconnects and Frontier vs. Fugaku - High-Performance Computing News Analysis | insideHPC

HPC luminary Jack Dongarra’s fascinating comments at SC22 on the low efficiency of leadership-class supercomputers highlighted by the latest High Performance Conjugate Gradients (HPCG) benchmark results will, I believe, influence the next generation …

staff (insideHPC)

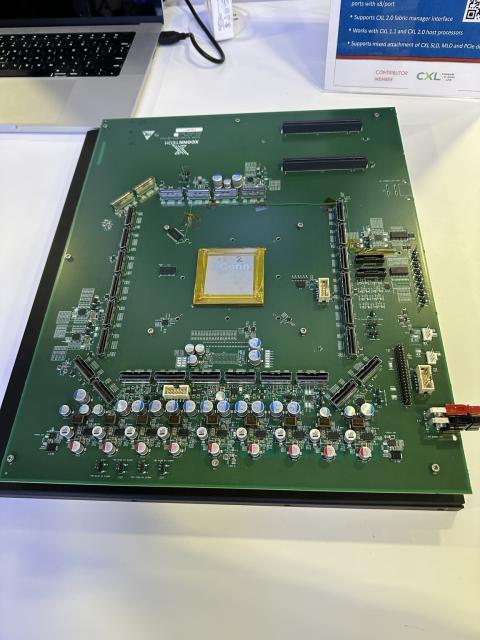

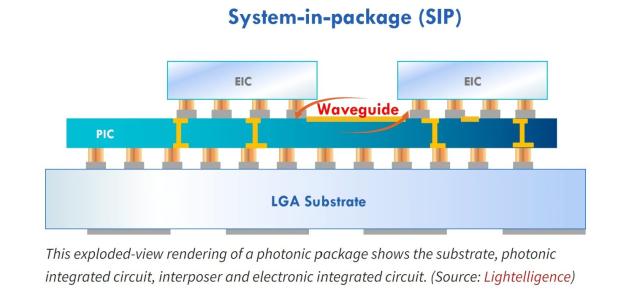

Using #SiliconPhotonics to Accelerate #HPC Workloads

eetimes.eu/using-silicon-photo…

Using Silicon Photonics to Accelerate HPC Workloads

Silicon photonics brings benefits for HPC workloads requiring faster data-transfer speeds, better energy efficiency and lower latency.

Hal Conklin (EE Times Europe)

This plot shows the time it takes to integrate the Solar System for 5 billion years. In 1950 it would have taken over a million years. Today it takes 1 day. The bottom right point is for our WHFast512 integrator which uses #AVX512 instructions: astro.theoj.org/article/84547-…

Plot made with data from Sam Hadden: shadden.github.io/nbody_histor….

WHFast512: A symplectic N-body integrator for planetary systems optimized with AVX512 instructions | Published in The Open Journal of Astrophysics

By Pejvak Javaheri, Hanno Rein & 1 more. A fast direct N-body integrator for gravitational systems, demonstrated using a 40 Gyr integration of the Solar System.

astro.theoj.org
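WHFast512 builds on the WHFast (Wisdom-Holman) family of symplectic integrators. The Python sketch below is *not* WHFast512 and does not use the Wisdom-Holman splitting; it is a plain kick-drift-kick leapfrog, the simplest symplectic scheme for direct N-body integration, shown only to illustrate the idea. Symplectic methods are favoured for very long runs because their energy error typically oscillates around the initial value instead of drifting. The toy system (Sun, Jupiter and Earth on roughly circular orbits), the units (AU, years, solar masses), the step size and all function names are assumptions chosen for illustration.

```python
import math

# Units assumed for this sketch: AU, years, solar masses, so G = 4*pi^2.
G = 4.0 * math.pi ** 2


def accelerations(pos, mass):
    """Pairwise Newtonian gravitational accelerations by O(N^2) direct summation."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = dx[0] ** 2 + dx[1] ** 2 + dx[2] ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * dx[k] * inv_r3  # pull of j on i
                acc[j][k] -= G * mass[i] * dx[k] * inv_r3  # equal and opposite
    return acc


def energy(pos, vel, mass):
    """Total (kinetic + potential) energy, used to monitor integration error."""
    kinetic = sum(0.5 * m * sum(v * v for v in vi) for m, vi in zip(mass, vel))
    potential = 0.0
    for i in range(len(mass)):
        for j in range(i + 1, len(mass)):
            potential -= G * mass[i] * mass[j] / math.dist(pos[i], pos[j])
    return kinetic + potential


def leapfrog(pos, vel, mass, dt, steps):
    """Kick-drift-kick leapfrog, a second-order symplectic integrator (updates in place)."""
    acc = accelerations(pos, mass)
    for _ in range(steps):
        for i in range(len(mass)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # full drift
        acc = accelerations(pos, mass)
        for i in range(len(mass)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos, vel


def sun_jupiter_earth():
    """Toy initial conditions: roughly circular orbits, zero total momentum."""
    mass = [1.0, 9.54e-4, 3.0e-6]
    pos = [[0.0, 0.0, 0.0], [5.2, 0.0, 0.0], [0.0, 1.0, 0.0]]
    vel = [[0.0, 0.0, 0.0], [0.0, math.sqrt(G / 5.2), 0.0], [-math.sqrt(G), 0.0, 0.0]]
    for k in range(3):  # give the Sun the momentum that cancels the planets'
        vel[0][k] = -sum(m * v[k] for m, v in zip(mass[1:], vel[1:])) / mass[0]
    return pos, vel, mass


if __name__ == "__main__":
    pos, vel, mass = sun_jupiter_earth()
    e0 = energy(pos, vel, mass)
    for year in range(1, 11):
        leapfrog(pos, vel, mass, dt=1e-3, steps=1000)  # 1000 steps per year
        print(year, abs((energy(pos, vel, mass) - e0) / e0))
```

With 1000 steps per year the printed relative energy error should stay small over the ten simulated years. A production integrator such as WHFast512 additionally vectorises the work with AVX512 instructions, as the paper's title states.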

The 2023 Ubuntu Summit has been living in my head rent-free... especially now that it is only two weeks away 😏

If you want to get ahead on your summit game, be sure to check out the posted timetable here: events.canonical.com/event/31/…. We have excellent talks and workshops across nine different tracks ranging from using #Ubuntu on #ARM-based laptops to using #SLURM with #Kubernetes for efficient #HPC job scheduling.

Hope to see you there, whether it be via a WiFi 🌐 or IRL 🤙 connection!

Ubuntu Summit 2023

Ubuntu Summit is an event focused on the Linux and Open Source ecosystem, beyond Ubuntu itself. Representatives of outstanding projects will demonstrate how their work is changing the future of technology as we know it.

Canonical / Ubuntu Events (Indico)

We are honored to be a media partner for #SC23 with @linuxmagazine

Standard registration ends Friday, October 13. Be sure to register now before prices increase: sc23.supercomputing.org/attend… #HPC #supercomputing #event #iamhpc

Location: Hawaii or Remote

Employer: University of Hawaii - System

Remote: Remote friendly

hr.rcuh.com/psp/hcmprd_exapp/E…

Hi friends! Very excited to announce that I'll be giving an @easy_build Tech Talk on the 13th of October on #AVX10!

The talk is titled "AVX10 for HPC: A reasonable solution to the 7 levels of AVX-512 folly".

Registration is free, and all #x86, #AVX, #AVX512, #SIMD, and #HPC experience levels are welcome!

The page is here: easybuild.io/tech-talks/008_av…

And you can register here! event.ugent.be/registration/eb…

Friendly reminder for @fclc’s EasyBuild Tech Talk "AVX10 for HPC - A reasonable solution to the 7 levels of AVX-512 folly" which is scheduled for Fri 13 Oct 2023 at 13:30 UTC.

More info via easybuild.io/tech-talks/008_av…

The OLCF-6 draft technical requirements have been released. This is the follow-on to the Frontier exascale system at Oak Ridge.

olcf.ornl.gov/draft-olcf-6-tec…

#HPC

Draft OLCF-6 Technical Requirements

The OLCF was established at Oak Ridge National Laboratory in 2004 with the mission of standing up a supercomputer 100 times more powerful than the leading systems of the day.

Oak Ridge Leadership Computing Facility

Researchers at ORNL developed a new #opensource cross-platform benchmarking software package just in time for #Frontier's launch in May 2022: OpenMxP

OpenMxP is an open-source implementation of the HPL-MxP (mixed-precision) benchmark.

techxplore.com/news/2023-09-op…

Researchers develop open-source mixed-precision benchmark tool for supercomputers

As Frontier, the world's first exascale supercomputer, was being assembled at the Oak Ridge Leadership Computing Facility in 2021, understanding its performance on mixed-precision calculations remained a difficult prospect.

Coury Z Turczyn (Tech Xplore)
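HPL-MxP measures how fast a machine solves a dense linear system when the expensive LU factorization runs in low precision and the answer is then refined back to FP64 accuracy. A minimal pure-Python sketch of that general idea follows; it is not OpenMxP's code and does not follow the benchmark's rules. Assumptions: FP32 (emulated by rounding through `struct`) stands in for the lower-precision arithmetic a GPU would use, the test matrix is made strictly diagonally dominant so LU without pivoting is safe, and the refinement is classic iterative refinement. All function names are hypothetical.

```python
import random
import struct


def to_fp32(x):
    """Round a Python float (IEEE FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def lu_fp32(a):
    """LU factorization without pivoting on a copy of `a`; stored entries rounded to FP32.

    Skipping pivoting is only reasonable because the test matrix is strictly
    diagonally dominant (an assumption of this sketch).
    """
    n = len(a)
    lu = [[to_fp32(v) for v in row] for row in a]
    for k in range(n):
        for i in range(k + 1, n):
            lu[i][k] = to_fp32(lu[i][k] / lu[k][k])  # multiplier, stored as L
            for j in range(k + 1, n):
                lu[i][j] = to_fp32(lu[i][j] - lu[i][k] * lu[k][j])
    return lu


def solve_fp32(lu, b):
    """Forward/back substitution with the FP32 factors; results rounded to FP32."""
    n = len(lu)
    y = [0.0] * n
    for i in range(n):  # L y = b, L has an implicit unit diagonal
        y[i] = to_fp32(b[i] - sum(lu[i][j] * y[j] for j in range(i)))
    x = [0.0] * n
    for i in reversed(range(n)):  # U x = y
        s = sum(lu[i][j] * x[j] for j in range(i + 1, n))
        x[i] = to_fp32((y[i] - s) / lu[i][i])
    return x


def residual_fp64(a, x, b):
    """r = b - A x, computed in full FP64 with the original matrix."""
    return [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(a, b)]


def mixed_precision_solve(a, b, iterations=5):
    lu = lu_fp32(a)          # O(n^3) work, done once in low precision
    x = solve_fp32(lu, b)    # initial solution, accurate to roughly FP32 level
    for _ in range(iterations):
        r = residual_fp64(a, x, b)             # O(n^2) work in FP64
        d = solve_fp32(lu, r)                  # correction from the FP32 factors
        x = [xi + di for xi, di in zip(x, d)]  # update accumulated in FP64
    return x


def make_system(n, seed=0):
    """Random, strictly diagonally dominant test system with a known solution."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    for i in range(n):
        a[i][i] += 2 * n
    x_true = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    b = [sum(aij * xj for aij, xj in zip(row, x_true)) for row in a]
    return a, b, x_true


if __name__ == "__main__":
    a, b, x_true = make_system(50)
    x_low = solve_fp32(lu_fp32(a), b)
    x_ref = mixed_precision_solve(a, b)
    print("FP32-only max error:", max(abs(u - v) for u, v in zip(x_low, x_true)))
    print("after refinement:   ", max(abs(u - v) for u, v in zip(x_ref, x_true)))
```

The O(n³) factorization happens once, in low precision; each refinement step only costs an O(n²) FP64 residual plus two triangular solves with the existing factors, which is why the approach pays off on hardware with fast low-precision units.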

EasyBuild v4.8.1 has just been released!

We found some bugs (much to our surprise), so we fixed them.

Several hooks were added to customise EasyBuild, incl. one to tweak shell commands being run (see also docs.easybuild.io/hooks).

Support was added for more software, 83 of them in total: go check out docs.easybuild.io/version-spec…

All details via docs.easybuild.io/release-note…

#HPC

Scientists, RSEs, sysadmins: unite! And register for the Workshop on Reproducible Software Environments 👇

hpc.guix.info/blog/2023/09/fir…

Folks happily running #Apptainer/#Singularity images generated by ‘guix pack’ with native MPI performance on #HPC clusters:

issues.guix.gnu.org/65801#2

Not really a surprise because that’s a major goal of our Open MPI packaging, but always pleasant feedback.

This is a Good Thing (tm)

If you know anyone from underrepresented groups in #HPC, please point them to the HPC Illuminations program.

We just released #AOMP 18.0-0 -- our #LLVM based #OpenMP compiler for #AMDGPU.

Go get your copy at github.com/ROCm-Developer-Tool…

Release AOMP Release 18.0-0 · ROCm-Developer-Tools/aomp

These are the release notes for AOMP 18.0-0. AOMP uses AMD developer modifications to the upstream LLVM development trunk. These differences are managed in a branch called the "amd-stg-open". This ...

GitHub

📔🖊️ Call for Papers to #PASC24:

Send us your algorithmic, #accelerated, #ML and/or #scalable works at the interface of #HPC and domain science!

ℹ️ Submit by Dec 1st!

We perform a two-stage review, details here:

pasc24.pasc-conference.org/sub…

Hello scientists in 🇫🇮 and 🌍 - @coderefinery starts next week, and everyone is invited. This isn't a programming course; it covers the extra tools (#git, #testing, #reproducibility, #documentation, etc.) that scientists need to program comfortably. T-Th, Sep 19-21 and 26-28, online.

coderefinery.github.io/2023-09…

If you're at Aalto, invite us to your research group to help put these tools into practice - we don't teach and leave you alone!

#teaching #course #RSEng #OpenScience #HPC

CodeRefinery workshop September 19-21 and 26-28, 2023

Free workshop on git, testing, documentation, and more in a workshop - for researchers and scientists.

coderefinery.github.io

The #kubecon schedule is live!! 🎉😍

We are coming back for more fun!! 🤯🦖😄

Come learn about the #JobSet API for running complex workflows/experiments for cloud and #HPC on #Kubernetes!

Let's move to a future of working together in this #convergedcomputing space. ❤️

(Forgive our bias.)

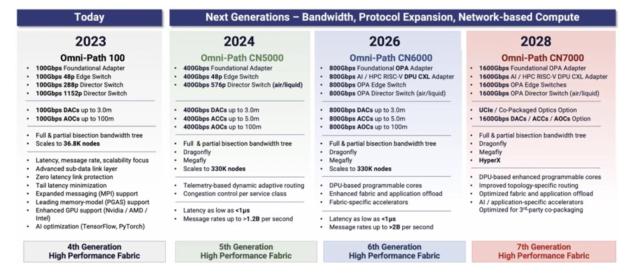

Cornelis has an ambitious roadmap for #Omnipath

With the 7th generation coming in 2028, Cornelis Networks will boost port speeds to 1.6 Tb/s and add the HyperX topology

nextplatform.com/2023/08/24/co…

#HPC #AI #Interconnect

Cornelis Unveils Ambitious Omni-Path Interconnect Roadmap

As we are fond of pointing out, when it comes to high performance, low latency InfiniBand-style networks, Nvidia is not the only choice in town and has…

Timothy Prickett Morgan (The Next Platform)
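In a regular HyperX topology, switches sit on an L-dimensional lattice and each switch is linked directly to every other switch that differs from it in exactly one coordinate, so any two switches are at most L hops apart. A small Python sketch of that switch graph follows. It assumes the textbook regular HyperX with one link per connected switch pair and ignores endpoints and link trunking; it says nothing about how Cornelis will actually build its networks, and the function names are hypothetical.

```python
import itertools
from collections import deque


def hyperx_neighbors(coord, shape):
    """Yield every switch that differs from `coord` in exactly one dimension."""
    for dim, size in enumerate(shape):
        for v in range(size):
            if v != coord[dim]:
                yield coord[:dim] + (v,) + coord[dim + 1:]


def hyperx_stats(shape):
    """Return (switch count, switch-to-switch link count, diameter in hops)."""
    switches = list(itertools.product(*(range(s) for s in shape)))
    # each link is seen once from each end, hence the division by two
    links = sum(1 for c in switches for _ in hyperx_neighbors(c, shape)) // 2
    # BFS from one switch; the graph is vertex-transitive, so one source suffices
    start = switches[0]
    dist = {start: 0}
    queue = deque([start])
    while queue:
        c = queue.popleft()
        for nb in hyperx_neighbors(c, shape):
            if nb not in dist:
                dist[nb] = dist[c] + 1
                queue.append(nb)
    return len(switches), links, max(dist.values())


if __name__ == "__main__":
    for shape in [(4, 4, 4), (8, 8), (16, 16)]:
        switches, links, diameter = hyperx_stats(shape)
        print(f"HyperX {shape}: {switches} switches, {links} links, diameter {diameter}")
```

Each switch needs sum(S_i - 1) switch-facing ports, while the diameter equals the number of dimensions; trading port count against hop count is the main knob a HyperX design tunes.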

IWOMP is the famous annual workshop dedicated to the promotion and advancement of all aspects of parallel programming with #OpenMP.

Check out the program and register on the #IWOMP website at the link below. The price shown on the registration page will increase next Monday, August 28, so register now! ☝

Location: University of Bristol, UK

Dates: 12-15 September 2023

IWOMP 2023 Conference Program - IWOMP

Review the IWOMP 2023 conference program and register to attend.

tim.lewis (IWOMP)

cc: @openmp_arb

#HPC #openmp

Leveraging renewable energy to reduce data centre emissions

Discover how the National Science Foundation Cloud and Autonomic Computing Centre is reducing data centre carbon emissions via renewables.

Jack Thomas (Innovation News Network)

Dr. Amanda Randles is the 2023 ACM SIGHPC Emerging Woman Leader in Technical Computing award winner

She holds over 120 patents, and her well-known blood flow simulation code, HARVEY, has been ported to the world’s top-ranked #supercomputers

Congratulations!

Harnessing AI and Machine Learning in High Performance Computing

Take advantage of this opportunity to discover innovative ways of integrating AI and ML into High Performance Computing environments! Our discussion will cove...

YouTube

jobs.inria.fr/public/classic/e…

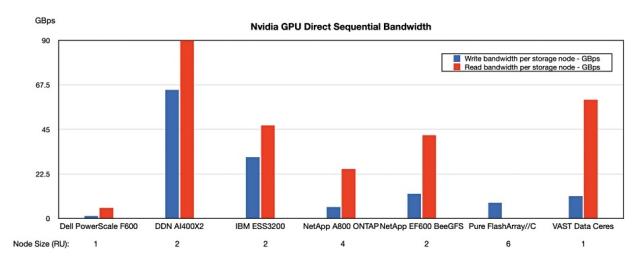

Blocks and Files compared how #storage systems perform when using Nvidia’s #GPUDirect protocol to serve data to and from Nvidia’s #GPUs

blocksandfiles.com/2023/07/31/…

Storage system speed serving data to Nvidia GPUs – Blocks and Files

Analysis: With Dell’s PowerScale scale-out filer being included in the company’s generative AI announcement this week, we looked at how it and other storage systems compare when using Nvidia’s GPUDirect protocol, to serve data to and from Nvidia’s GP…

Chris Mellor (Situation Publishing)

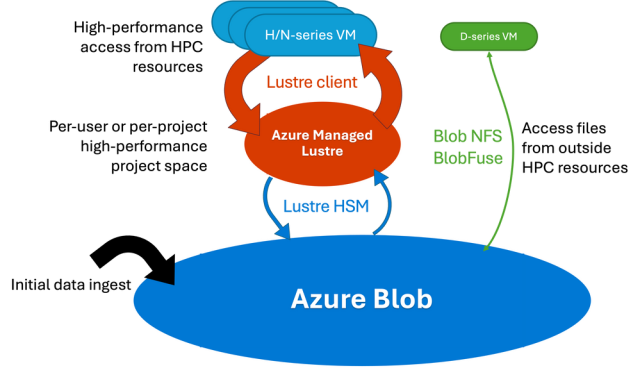

Azure Managed #Lustre: not your grandparents' parallel file system - by @glennklockwood

techcommunity.microsoft.com/t5…

Azure Managed Lustre: not your grandparents' parallel file system

Although our new Azure Managed Lustre File System is built on tried-and-true Lustre, thinking about it as the same monolithic parallel file system you'd…

techcommunity.microsoft.com

Find out how to get computational resources on the DiRAC facility here:

dirac.ac.uk/callforproposals/

#HPC #ECR #Astronomy #Physics

Call for Proposals - DiRAC

Access to DiRAC is co-ordinated by The STFC's DiRAC Resource Allocation Committee, which puts out an annual Call for Proposals to request time, a Director's…

DiRAC Admin (DiRAC)

PCI-SIG is exploring an #optical interconnect

"Optical connections will be an important advancement for #PCIe architecture as they will allow for higher performance, lower power consumption, extended reach and reduced latency”

businesswire.com/news/home/202…

PCI-SIG® Exploring an Optical Interconnect to Enable Higher PCIe Technology Performance

PCI-SIG® today announced the formation of a new workgroup to deliver PCI Express® (PCIe®) technology over optical connections. The PCI-SIG Optical Wor…

www.businesswire.com

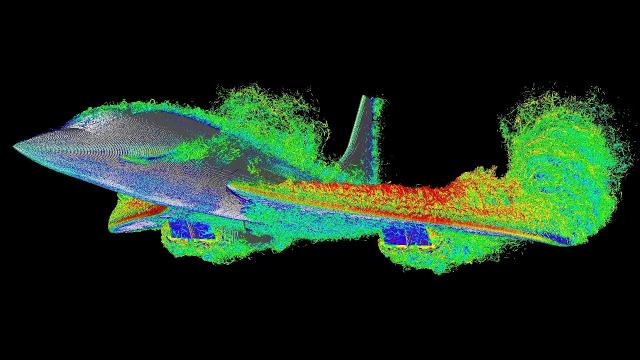

Over the weekend I got to test #FluidX3D on the world's largest #HPC #GPU server, #GigaIOSuperNODE. Here is one of the largest #CFD simulations ever: the Concorde for 1 second at 300 km/h landing speed, at a resolution of 40 *Billion* cells. Runtime was 33 hours on 32 AMD Instinct #MI210 GPUs with a total of 2 TB VRAM.

youtu.be/clAqgNtySow

#LBM compute was 29 hours for 67k timesteps at 2976×8936×1489 (12.4mm)³ cells, plus 4h for rendering 5×600 4K frames.

🧵1/3

FluidX3D on GigaIO SuperNODE - Concorde 40 Billion Cell CFD Simulation - 33h on 32x MI210 64GB GPUs

This is one of the largest CFD simulations ever done, on the world's largest GPU server, the GigaIO SuperNODE, equipped with 32x AMD Instinct MI210 64GB GPUs...

YouTube
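The numbers in the FluidX3D thread can be cross-checked with a few lines of arithmetic. A small Python sketch, assuming the 67k time steps span the full simulated second and taking "64GB" per GPU at face value (the post's 67k and 2TB figures are themselves rounded):

```python
# Figures quoted in the FluidX3D post; "67k" and "2TB" are rounded there.
nx, ny, nz = 2976, 8936, 1489   # lattice size in cells
cell_size_m = 12.4e-3           # (12.4 mm)^3 cells
simulated_s = 1.0               # "the Concorde for 1 second"
timesteps = 67_000              # "67k timesteps", assumed to span that second
lbm_hours = 29                  # LBM compute time, excluding rendering
gpus, vram_gb_per_gpu = 32, 64  # 32x MI210 64GB

cells = nx * ny * nz
domain_m = tuple(n * cell_size_m for n in (nx, ny, nz))
dt_us = simulated_s / timesteps * 1e6
cell_updates_per_s = cells * timesteps / (lbm_hours * 3600)
total_vram_gb = gpus * vram_gb_per_gpu

print(f"cells: {cells:,} (~{cells / 1e9:.1f} billion)")
print("domain: {:.1f} m x {:.1f} m x {:.1f} m".format(*domain_m))
print(f"physical time step: ~{dt_us:.1f} µs")
print(f"aggregate throughput: ~{cell_updates_per_s / 1e9:.1f} billion cell updates/s")
print(f"total VRAM: {total_vram_gb} GB")
```

The roughly 39.6 billion cells match the "40 Billion" in the post, and 32 × 64 GB gives the quoted 2 TB. The throughput figure is derived from wall-clock time, so it is an aggregate over all 32 GPUs that folds in whatever overhead those 29 hours contained.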

Been a busy past few days with work, travelling, and fighting off jet lag... but one thing that I am happy to announce is that an HPC community engineering team that I helped start is now officially a part of the Ubuntu project. If you are interested in using Ubuntu for HPC, or just interested in being involved with a community engineering team, you should totally check out this article below 👇

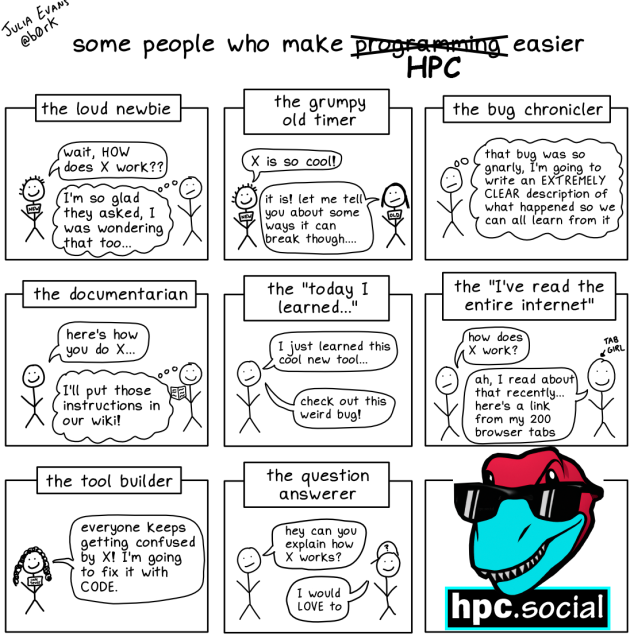

Does anyone have any research software engineering (#RSEng) or #SciComp related graphics that can be used as stickers or general promotional material? (not tied to any one organization, CC-BY or better)

#HPC #OpenScience #ComputationalScience

Attached are some drafts I did a while ago following the tools metaphor, but I'm definitely not good at this...