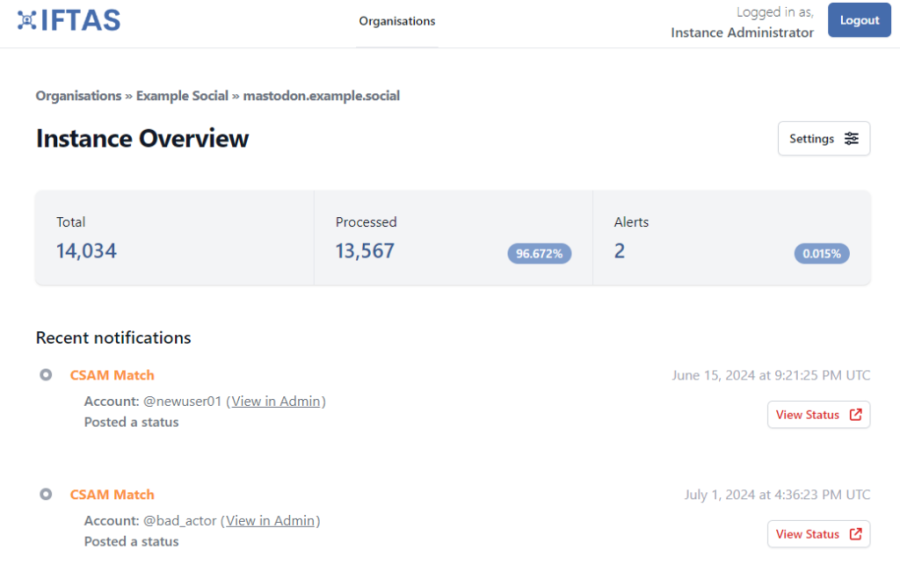

We are extremely proud to announce that our Content Classification Service (CCS) is up and running, with our first classifier active. We are starting with detecting child sexual abuse media for our opt-in connected servers, and we plan to introduce additional classifiers over time, including non-consensual intimate imagery, terroristic and violent extremist content, malicious URLs, spam, and more.

For the time being we are operating in a closed test with a very small number of servers, and we have a slate of additional server admins ready to participate in our beta. You can learn more – and sign up to participate – on the CCS Web page.

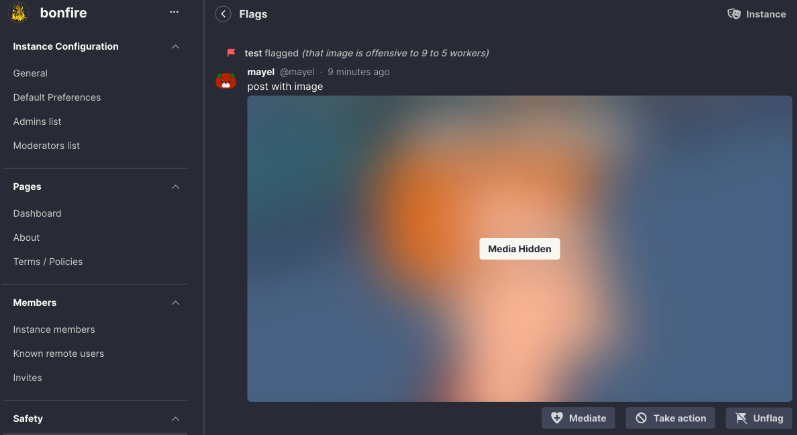

In other news, we are kicking off an exciting collaboration with the Bonfire Networks developer team.

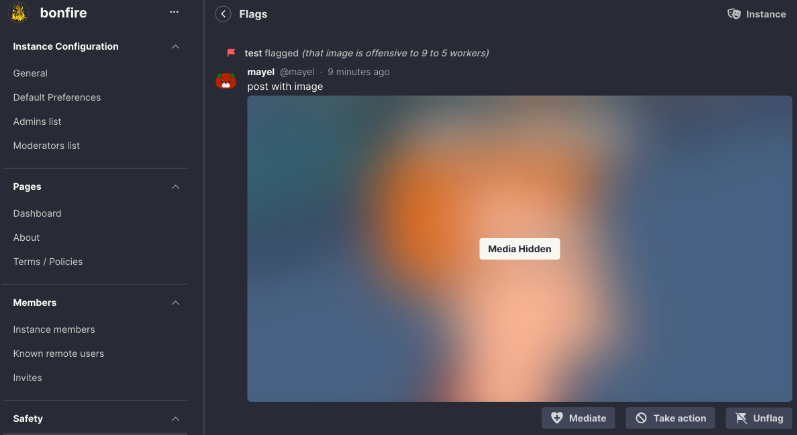

Bonfire is a governance-first community platform built on ActivityPub, and the developer team are keen to review proposals from moderators so they can rapidly iterate on evidence-based, prosocial tooling and workflows in a co-design effort with the moderator community.

The Bonfire team have already adopted several proposals, including:

- greyscaling and blurring media in reported content – this reduces trauma for moderators reviewing harmful content

- muting audio in media files – this spares moderators sudden noises, loud volume, or traumatic audio during review

- removing clickable links from reported content and suggesting URL investigation tools – this reduces the chance of moderators clicking through to phishing, malware, and other harmful web sites

Our IFTAS Connect community is working to provide additional feedback, and anyone is welcome to submit suggestions on the Bonfire GitHub.

This is a fantastic opportunity for the Fediverse’s trust and safety community to help guide the development of modular tooling that we hope will benefit not only Bonfire communities but the wider ecosystem, whether through direct adoption of the tooling, Fediverse Enhancement Proposals, or other platforms incorporating the same evidence-based, prosocial approaches to empowering community managers and moderators.

We urge all IFTAS Connect moderator members to join us in the Moderator Tooling Workgroup and tell us all the tools, features and functions you want to see added to keep you and your community safe!

Lastly, a huge thank you to everyone who has responded to our community support drive: we’ve raised over $2,000 this year in direct community support, and our IFTAS First 50 page is filling up! As we head into giving season, a quick reminder that IFTAS is a 501(c)(3) nonprofit, so all donations are tax-deductible for US supporters, and we accept a wide range of support. If you can, donate today to keep supporting our mission!

about.iftas.org/2024/10/03/ift…

#ActivityPub #BetterSocialMedia #Bonfire #Fediverse

IFTAS CCS is an opt-in, privacy-preserving webhook service that provides actionable alerts on content. Our initial service provides CSAM hash and match functionality, and IFTAS handles the required…

IFTAS